
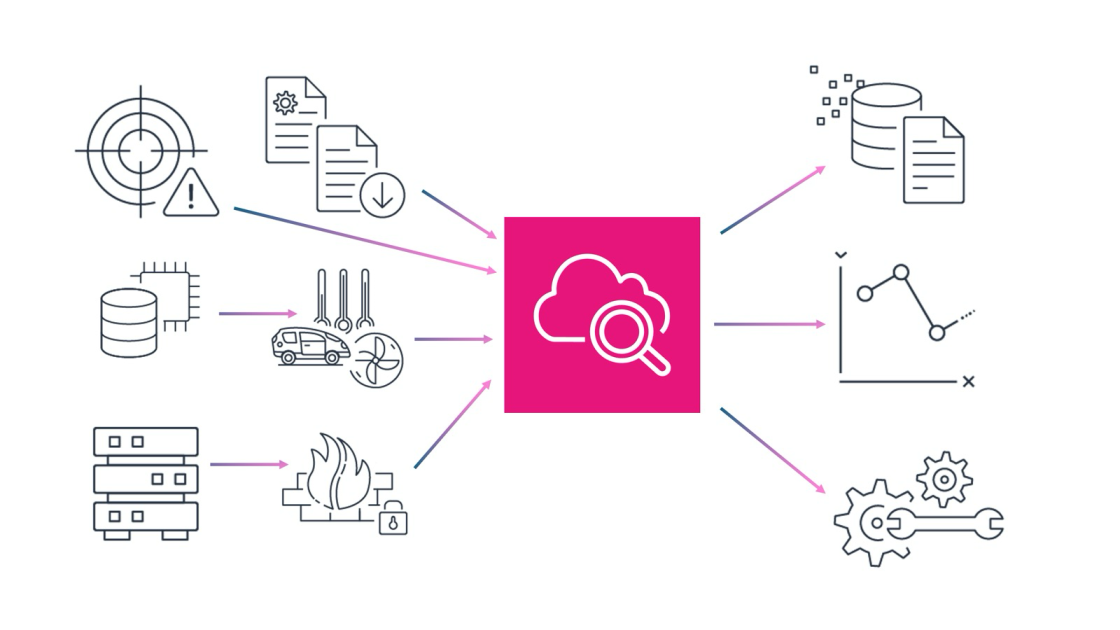

Today, we’re expanding Amazon CloudWatch so you can unify and manage log data across operational, security, and compliance use cases, with flexible, powerful analytics in one place and with less data duplication and lower costs.

With this enhancement, CloudWatch automatically normalizes and processes data for consistency across sources, with built-in support for the Open Cybersecurity Schema Framework (OCSF) and OpenTelemetry (OTel) formats, so you can focus on analytics and insights. CloudWatch also introduces Apache Iceberg-compatible access to your data through Amazon Simple Storage Service (Amazon S3) Tables, so you can run analytics not only in CloudWatch but also with Amazon Athena, Amazon SageMaker Unified Studio, or any other Iceberg-compatible tool.
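To make OCSF normalization more concrete, here is a minimal sketch that maps one VPC Flow Logs record in the default version 2 format to a simplified OCSF Network Activity event (class_uid 4001). This is not CloudWatch’s managed conversion. The choice of OCSF attributes is an assumption, and the helper names (`parse_vpc_flow_v2`, `to_ocsf_network_activity`) are hypothetical.

```python
from typing import Any

# Field order of the default VPC Flow Logs format (version 2).
VPC_FLOW_V2_FIELDS = [
    "version", "account_id", "interface_id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log_status",
]


def parse_vpc_flow_v2(line: str) -> dict:
    """Split a space-delimited flow log line into named fields."""
    values = line.split()
    if len(values) != len(VPC_FLOW_V2_FIELDS):
        raise ValueError(f"expected {len(VPC_FLOW_V2_FIELDS)} fields, got {len(values)}")
    return dict(zip(VPC_FLOW_V2_FIELDS, values))


def to_ocsf_network_activity(record: dict) -> dict[str, Any]:
    """Build a simplified, hypothetical OCSF Network Activity event.

    Assumes an OK record. NODATA/SKIPDATA records use "-" placeholders
    and would fail the int() conversions below.
    """
    allowed = record["action"] == "ACCEPT"
    return {
        "class_uid": 4001,    # OCSF Network Activity class
        "category_uid": 4,    # OCSF Network Activity category
        "time": int(record["start"]) * 1000,  # OCSF timestamps are epoch milliseconds
        "cloud": {"provider": "AWS", "account": {"uid": record["account_id"]}},
        "src_endpoint": {"ip": record["srcaddr"], "port": int(record["srcport"])},
        "dst_endpoint": {"ip": record["dstaddr"], "port": int(record["dstport"])},
        "connection_info": {"protocol_num": int(record["protocol"])},
        "traffic": {"packets": int(record["packets"]), "bytes": int(record["bytes"])},
        "action": "Allowed" if allowed else "Denied",
        "action_id": 1 if allowed else 2,
    }


line = ("2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 "
        "20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK")
event = to_ocsf_network_activity(parse_vpc_flow_v2(line))
print(event["src_endpoint"], event["action"])
```

Once source-specific fields are mapped onto shared attributes such as `src_endpoint.ip`, a single query can cover flow logs, firewall logs, and third-party network data alike.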

You can also correlate your operational data in CloudWatch with business data from your preferred tools. This unified approach simplifies management and provides end-to-end correlation across security, operations, and business use cases.

Here are the detailed improvements:

- Simplify data ingestion and normalization – CloudWatch automatically collects vended AWS logs across AWS accounts and Regions, integrating with AWS Organizations, from AWS services including AWS CloudTrail, Amazon Virtual Private Cloud (Amazon VPC) Flow Logs, AWS WAF access logs, and Amazon Route 53 Resolver logs. It also provides pre-built connectors for third-party sources such as endpoint security (CrowdStrike, SentinelOne), identity (Microsoft Entra ID), cloud security (Wiz), network security (Zscaler, Palo Alto Networks), productivity and collaboration (Microsoft Office 365, Windows Event Logs, and GitHub), and IT service management (ServiceNow CMDB). To normalize and process your data as it is ingested, CloudWatch offers managed OCSF conversion for various AWS and third-party data sources, along with other processors such as Grok for custom parsing, array-level operations, and string manipulation.

- Reduce the cost of log data management – CloudWatch consolidates log management into a single service with built-in management features, so you don’t have to store and maintain multiple copies of the same data across different tools and data stores. CloudWatch’s unified data store eliminates the need for complex ETL pipelines and reduces the operating costs and management overhead of maintaining multiple separate data stores and tools.

- Discover business insights from log data – In CloudWatch, you can query your data in natural language or with popular query languages such as Logs Insights query language (Logs Insights QL), OpenSearch Piped Processing Language (PPL), and OpenSearch SQL through a single interface. You can also query it with your preferred analytics tools through Apache Iceberg-compatible tables. The new Facets interface gives you intuitive filtering by source, application, account, Region, and log type, which you can use to run queries across log groups in multiple AWS accounts and Regions with intelligent parameter inference.
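The Grok processor mentioned above parses unstructured text with named patterns. The following sketch illustrates the underlying idea by translating a Grok expression into a Python regular expression with one named group per field. The tiny pattern library and the function names are hypothetical, far smaller than a real Grok library, and do not reflect CloudWatch’s processor configuration format.

```python
from __future__ import annotations

import re

# Tiny, hypothetical subset of Grok base patterns.
BASE_PATTERNS = {
    "INT": r"[+-]?\d+",
    "WORD": r"\w+",
    "IPV4": r"(?:\d{1,3}\.){3}\d{1,3}",
    "NOTSPACE": r"\S+",
    "GREEDYDATA": r".*",
}

# Matches %{PATTERN} or %{PATTERN:field}.
_GROK_TOKEN = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def grok_to_regex(expression: str) -> re.Pattern[str]:
    """Compile a Grok expression into a regex with one named group per field."""

    def expand(token: re.Match[str]) -> str:
        pattern = BASE_PATTERNS[token.group(1)]  # KeyError for unknown pattern names
        name = token.group(2)
        return f"(?P<{name}>{pattern})" if name else f"(?:{pattern})"

    return re.compile(_GROK_TOKEN.sub(expand, expression))


def grok_parse(expression: str, line: str) -> dict[str, str] | None:
    """Return the captured fields, or None if the whole line does not match."""
    match = grok_to_regex(expression).fullmatch(line)
    return match.groupdict() if match else None


print(grok_parse(
    "%{IPV4:client} %{WORD:method} %{NOTSPACE:path} %{INT:status} %{INT:bytes}",
    "203.0.113.7 GET /index.html 200 1043",
))
```

Because Grok expressions compile down to regular expressions, any literal text between tokens is treated as regex syntax, which is also how common Grok implementations behave.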

In the next sections, we’ll explore the new log management and analytics features of CloudWatch Logs!

1. Discover and manage data by data source and type

Get a high-level overview of your logs and all data sources with the new log management view in the CloudWatch console. To get started, go to the CloudWatch console and choose Log Management under the Logs menu in the left navigation pane. On the Summary tab, you can monitor your log data sources and types, the ingestion performance of your log groups, and anomalies.

On the Data sources tab, you can find and manage log data by data source, type, and field. CloudWatch ingests and automatically categorizes data sources as AWS services, third-party sources, or your own custom sources such as application logs.

Choose Data source actions to integrate with S3 Tables, which makes future logs for the selected data sources available in S3 Tables. You then have the flexibility to analyze logs with Athena, Amazon Redshift, and other query engines such as Apache Spark using Iceberg-compatible access patterns. With this integration, logs from CloudWatch are available in read-only form in the aws-cloudwatch S3 table bucket.

When you select a specific data source, such as CloudTrail data, you can view details about it, including the data format, pipeline, facets and field indexes, S3 Tables associations, and the number of logs with that data source. With the new schema support, you can view all log groups included in that data source and create and edit field index policies by data source and type.
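Field index policies can also be managed programmatically. The sketch below assumes the CloudWatch Logs `PutIndexPolicy` API as exposed by boto3 (`put_index_policy` with `logGroupIdentifier` and `policyDocument`) and a policy document that lists fields under a `Fields` key. That API applies to individual log groups, and the data-source-level policies described above may use different keys or APIs, so check the current API reference. The helper names, log group name, and field names are hypothetical.

```python
import json


def build_field_index_policy(fields: list) -> str:
    """Return a field index policy document, de-duplicating fields in order."""
    unique = list(dict.fromkeys(fields))
    if not unique:
        raise ValueError("at least one field is required")
    return json.dumps({"Fields": unique})


def apply_field_index_policy(log_group: str, fields: list) -> None:
    """Attach the policy to one log group (requires AWS credentials)."""
    import boto3  # imported lazily so build_field_index_policy runs without boto3

    logs = boto3.client("logs")
    logs.put_index_policy(
        logGroupIdentifier=log_group,
        policyDocument=build_field_index_policy(fields),
    )


print(build_field_index_policy(["eventName", "sourceIPAddress", "eventName"]))
```

With credentials configured, a call such as `apply_field_index_policy("my-cloudtrail-log-group", ["eventName", "sourceIPAddress"])` would attach the policy. Indexing frequently filtered CloudTrail fields such as these is a typical choice.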

To learn more about how to manage your data sources and indexing policies, visit Data Sources in the Amazon CloudWatch Logs User Guide.

2. Ingest and transform data using CloudWatch pipelines

You can create pipelines to streamline the collection, transformation, and routing of telemetry and security data while standardizing data formats to improve the traceability and management of security data. The new CloudWatch pipelines feature connects to data from a catalog of data sources, so you can add and configure processors from a library to parse, enrich, and standardize your data.

On the Pipelines tab, choose Add pipeline. The pipeline configuration wizard appears and guides you through five steps. In these steps, you select a data source and provide details about it such as the log source type, configure a destination, configure up to 19 processors that act on your data (for example, by filtering, transforming, or enriching it), and finally review and deploy the pipeline.
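Conceptually, a pipeline applies an ordered chain of processors to each record between a source and a destination. The following sketch models that chain in plain Python, with one filter, one transform, and one enrichment processor. It enforces the 19-processor limit mentioned above. The `Pipeline` class and processor helpers are hypothetical and do not represent CloudWatch’s pipeline configuration format.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional

# A processor takes a record and returns a (possibly new) record, or None to drop it.
Processor = Callable[[dict], Optional[dict]]

MAX_PROCESSORS = 19  # processor limit described for the pipeline wizard


@dataclass
class Pipeline:
    source: str
    destination: str
    processors: list[Processor] = field(default_factory=list)

    def add(self, processor: Processor) -> Pipeline:
        if len(self.processors) >= MAX_PROCESSORS:
            raise ValueError(f"a pipeline supports at most {MAX_PROCESSORS} processors")
        self.processors.append(processor)
        return self

    def run(self, records: list[dict]) -> list[dict]:
        """Apply processors in order; a None result drops the record."""
        output = []
        for record in records:
            current: Optional[dict] = dict(record)
            for processor in self.processors:
                current = processor(current)
                if current is None:
                    break
            if current is not None:
                output.append(current)
        return output


def keep_action(action: str) -> Processor:
    """Filter: keep only records whose 'action' equals the given value."""
    return lambda r: r if r.get("action") == action else None


def rename_field(old: str, new: str) -> Processor:
    """Transform: rename a field if it is present."""
    def proc(r: dict) -> dict:
        r = dict(r)
        if old in r:
            r[new] = r.pop(old)
        return r
    return proc


def add_field(key: str, value: object) -> Processor:
    """Enrich: add a static field to every record."""
    return lambda r: {**r, key: value}


pipeline = (
    Pipeline(source="vpc-flow-logs", destination="security-logs")
    .add(keep_action("REJECT"))
    .add(rename_field("srcaddr", "src_ip"))
    .add(add_field("environment", "prod"))
)
print(pipeline.run([
    {"action": "ACCEPT", "srcaddr": "10.0.0.1"},
    {"action": "REJECT", "srcaddr": "10.0.0.2"},
]))
```

Filtering first is a deliberate ordering choice. Records that are dropped early never reach the more expensive transform and enrichment steps.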

You can also create a pipeline through the new Ingestion experience in CloudWatch. To learn more about how to set up and manage pipelines, visit Pipelines in the Amazon CloudWatch Logs User Guide.

3. Improved analytics and querying based on data sources

You can enhance your analysis with support for facets and data source-based querying. Facets let you interactively explore and drill into your logs, and their values are automatically extracted for the selected time period.
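As a rough mental model of facets, the sketch below counts the distinct values of each facet field for records inside a time window. It then keeps only the records matching the selected facet values. This is a simplified, hypothetical illustration with made-up field names and sample records, not how CloudWatch extracts or stores facets.

```python
from collections import Counter


def facet_counts(records, facet_fields, start, end):
    """Count values of each facet field for records with start <= timestamp < end."""
    counts = {name: Counter() for name in facet_fields}
    for record in records:
        if start <= record["timestamp"] < end:
            for name in facet_fields:
                if name in record:
                    counts[name][record[name]] += 1
    return counts


def filter_by_facets(records, selections):
    """Keep records whose value for every selected facet is among the chosen values."""
    return [
        r for r in records
        if all(r.get(name) in values for name, values in selections.items())
    ]


logs = [
    {"timestamp": 100, "action": "ACCEPT", "account": "111111111111"},
    {"timestamp": 200, "action": "REJECT", "account": "111111111111"},
    {"timestamp": 300, "action": "REJECT", "account": "222222222222"},
]
print(facet_counts(logs, ["action", "account"], start=100, end=300))
print(filter_by_facets(logs, {"action": {"REJECT"}}))
```

Values within one facet act as alternatives, and different facets are combined with AND, which mirrors the usual faceted-search behavior.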

Choose the Facets tab in Logs Insights under the Logs menu in the left navigation pane. The panel shows the available facets and their values. Choose one or more facets and values to explore your data interactively. Here, I select the VPC Flow Logs group and the Action facet, use the AI query generator to query for the top five patterns in my VPC Flow Logs, and get the pattern results.
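To illustrate what “top five patterns” means, the following sketch masks variable tokens (IPv4 addresses, then integers) in each log message. It then counts the resulting templates. This is a deliberately simple stand-in with hypothetical function names and sample messages, not the pattern analysis that CloudWatch Logs Insights performs.

```python
import re
from collections import Counter

_IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
_INT = re.compile(r"\b\d+\b")


def to_pattern(message: str) -> str:
    """Replace variable tokens with placeholders; IPs first so their octets stay intact."""
    return _INT.sub("<NUM>", _IPV4.sub("<IP>", message))


def top_patterns(messages, n=5):
    """Return the n most common templates with their counts."""
    return Counter(to_pattern(m) for m in messages).most_common(n)


messages = [
    "ACCEPT 10.0.0.1 -> 10.0.0.2 port 443",
    "ACCEPT 10.0.0.3 -> 10.0.0.4 port 443",
    "REJECT 10.0.0.5 -> 10.0.0.6 port 22",
]
print(top_patterns(messages))
```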

You can save your query along with the selected facets and values. The next time you open the saved query, those facets and values are preselected. To learn more about managing facets, visit Facets in the CloudWatch Logs User Guide.

As I noted earlier, you can integrate data sources with S3 Tables and query them together. For example, using the query editor in Athena, you can correlate network traffic with AWS API activity from a specific IP range (174.163.137.*) by joining VPC Flow Logs with CloudTrail logs on matching source IP addresses.

This type of integrated search is particularly valuable for security monitoring, incident investigation, and detecting suspicious behavior. You can see whether an IP address that makes network connections is also performing sensitive AWS operations, such as creating users, modifying security groups, or accessing data.
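The correlation described above is essentially a SQL join on source IP address. So that it runs locally, the sketch below uses an in-memory SQLite database as a stand-in for Athena. The table names (`vpc_flow`, `cloudtrail`), the column names, and the list of sensitive event names are hypothetical. The actual S3 Tables schema that CloudWatch exposes may differ, so adapt the query to it before running it in Athena.

```python
import sqlite3

# Hypothetical, simplified schemas standing in for the S3 Tables exposed to Athena.
SCHEMA = """
CREATE TABLE vpc_flow (srcaddr TEXT, dstaddr TEXT, dstport INTEGER, action TEXT);
CREATE TABLE cloudtrail (sourceipaddress TEXT, eventname TEXT, eventtime TEXT);
"""

# Join flows from the suspicious range with sensitive API calls from the same IP.
CORRELATION_QUERY = """
SELECT DISTINCT f.srcaddr, c.eventname, c.eventtime
FROM vpc_flow AS f
JOIN cloudtrail AS c ON c.sourceipaddress = f.srcaddr
WHERE f.srcaddr LIKE '174.163.137.%'
  AND c.eventname IN ('CreateUser', 'AuthorizeSecurityGroupIngress', 'GetObject')
ORDER BY c.eventtime
"""


def correlate(flows, events):
    """Load sample rows into an in-memory database and run the correlation query."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(SCHEMA)
        conn.executemany("INSERT INTO vpc_flow VALUES (?, ?, ?, ?)", flows)
        conn.executemany("INSERT INTO cloudtrail VALUES (?, ?, ?)", events)
        return conn.execute(CORRELATION_QUERY).fetchall()
    finally:
        conn.close()


flows = [
    ("174.163.137.10", "10.0.1.5", 443, "ACCEPT"),
    ("198.51.100.7", "10.0.1.5", 22, "REJECT"),
]
events = [
    ("174.163.137.10", "CreateUser", "2025-01-01T10:00:00Z"),
    ("174.163.137.10", "DescribeInstances", "2025-01-01T10:05:00Z"),
    ("198.51.100.7", "CreateUser", "2025-01-01T11:00:00Z"),
]
print(correlate(flows, events))
```

`DISTINCT` matters here because a single IP usually produces many flow records, and without it each matching API call would be repeated once per flow.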

To learn more, visit S3 Tables integration with CloudWatch in the CloudWatch Logs User Guide.

Now available

The new Amazon CloudWatch log management features are available today in all AWS Regions except the AWS GovCloud (US) and China Regions. For Regional availability and future roadmap, visit AWS Capabilities by Region. There are no upfront commitments or minimum fees; you pay existing CloudWatch Logs pricing for data ingestion, storage, and queries. To learn more, visit the CloudWatch pricing page.

Try it in the CloudWatch console. To learn more, visit the CloudWatch product page, and send feedback to AWS re:Post for CloudWatch Logs or through your usual AWS Support contacts.

— Channy